issues

5 rows where user = 64621312 sorted by updated_at descending

This data as json, CSV (advanced)

Suggested facets: locked, comments, state_reason, created_at (date), updated_at (date), closed_at (date)

| id | node_id | number | title | user | state | locked | assignee | milestone | comments | created_at | updated_at ▲ | closed_at | author_association | active_lock_reason | draft | pull_request | body | reactions | performed_via_github_app | state_reason | repo | type |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1340994913 | I_kwDOAMm_X85P7fVh | 6924 | Memory Leakage Issue When Running to_netcdf | lassiterdc 64621312 | closed | 0 | 2 | 2022-08-16T23:58:17Z | 2023-01-17T18:38:40Z | 2023-01-17T18:38:40Z | NONE | What is your issue?I have a zarr file that I'd like to convert to a netcdf which is too large to fit in memory. My computer has 32GB of RAM so writing ~5.5GB chunks shouldn't be a problem. However, within seconds of running this script, my memory usage quickly tops out consuming the available ~20GB and the script fails. Data: Dropbox link to zarr file containing radar rainfall data for 6/28/2014 over the United States that is around 1.8GB in total. Code: ```python import xarray as xr import zarr fpath_zarr = "out_zarr_20140628.zarr" ds_from_zarr = xr.open_zarr(store=fpath_zarr, chunks={'outlat':3500, 'outlon':7000, 'time':30}) ds_from_zarr.to_netcdf("ds_zarr_to_nc.nc", encoding= {"rainrate":{"zlib":True}}) ``` Outputs:

Package versions:

|

{

"url": "https://api.github.com/repos/pydata/xarray/issues/6924/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | xarray 13221727 | issue | ||||||

| 1368696980 | I_kwDOAMm_X85RlKiU | 7018 | Writing netcdf after running xarray.dataset.reindex to fill gaps in a time series fails due to memory allocation error | lassiterdc 64621312 | open | 0 | 3 | 2022-09-10T18:21:48Z | 2022-09-15T19:59:39Z | NONE | Problem SummaryI am attempting to convert a.grib2 file representing a single day's worth of gridded radar rainfall data spanning the continental US, into a netcdf. When a .grib2 is missing timesteps, I am attempting to fill them in with NA values using Accessing Full Reproducible ExampleThe code and data to reproduce my results can be downloaded from this Dropbox link. The code is also shown below followed by the outputs. Potentially relevant OS and environment information are shown below as well. Code```python %% Import librariesimport time start_time = time.time() import xarray as xr import cfgrib from glob import glob import pandas as pd import dask dask.config.set(**{'array.slicing.split_large_chunks': False}) # to silence warnings of loading large slice into memory dask.config.set(scheduler='synchronous') # this forces single threaded computations (netcdfs can only be written serially) %% parameterschnk_sz = "7000MB" fl_out_nc = "out_netcdfs/20010101.nc" fldr_in_grib = "in_gribs/20010101.grib2" %% loading and exporting datasetds = xr.open_dataset(fldr_in_grib, engine="cfgrib", chunks={"time":chnk_sz}, backend_kwargs={'indexpath': ''}) reindexstart_date = pd.to_datetime('2001-01-01') tstep = pd.Timedelta('0 days 00:05:00') new_index = pd.date_range(start=start_date, end=start_date + pd.Timedelta(1, "day"),\ freq=tstep, inclusive='left') ds = ds.reindex(indexers={"time":new_index}) ds = ds.unify_chunks() ds = ds.chunk(chunks={'time':chnk_sz}) print("######## INSPECTING DATASET PRIOR TO WRITING TO NETCDF ########") print(ds) print(' ') print("######## ERROR MESSAGE ########") ds.to_netcdf(fl_out_nc, encoding= {"unknown":{"zlib":True}}) ``` Outputs``` ## INSPECTING DATASET PRIOR TO WRITING TO NETCDF<xarray.Dataset> Dimensions: (time: 288, latitude: 3500, longitude: 7000) Coordinates: * time (time) datetime64[ns] 2001-01-01 ... 2001-01-01T23:55:00 * latitude (latitude) float64 54.99 54.98 54.98 54.97 ... 20.03 20.02 20.01 * longitude (longitude) float64 230.0 230.0 230.0 ... 300.0 300.0 300.0 step timedelta64[ns] ... surface float64 ... valid_time (time) datetime64[ns] dask.array<chunksize=(288,), meta=np.ndarray> Data variables: unknown (time, latitude, longitude) float32 dask.array<chunksize=(70, 3500, 7000), meta=np.ndarray> Attributes: GRIB_edition: 2 GRIB_centre: 161 GRIB_centreDescription: 161 GRIB_subCentre: 0 Conventions: CF-1.7 institution: 161 history: 2022-09-10T14:50 GRIB to CDM+CF via cfgrib-0.9.1... ## ERROR MESSAGEOutput exceeds the size limit. Open the full output data in a text editorMemoryError Traceback (most recent call last) d:\Dropbox_Sharing\reprex\2022-9-9_writing_ncdf_fails\reprex\exporting_netcdfs_reduced.py in <cell line: 22>() 160 print(' ') 161 print("######## ERROR MESSAGE ########") ---> 162 ds.to_netcdf(fl_out_nc, encoding= {"unknown":{"zlib":True}}) File c:\Users\Daniel\anaconda3\envs\weather_gen_3\lib\site-packages\xarray\core\dataset.py:1882, in Dataset.to_netcdf(self, path, mode, format, group, engine, encoding, unlimited_dims, compute, invalid_netcdf) 1879 encoding = {} 1880 from ..backends.api import to_netcdf -> 1882 return to_netcdf( # type: ignore # mypy cannot resolve the overloads:( 1883 self, 1884 path, 1885 mode=mode, 1886 format=format, 1887 group=group, 1888 engine=engine, 1889 encoding=encoding, 1890 unlimited_dims=unlimited_dims, 1891 compute=compute, 1892 multifile=False, 1893 invalid_netcdf=invalid_netcdf, 1894 ) File c:\Users\xxxxx\anaconda3\envs\weather_gen_3\lib\site-packages\xarray\backends\api.py:1219, in to_netcdf(dataset, path_or_file, mode, format, group, engine, encoding, unlimited_dims, compute, multifile, invalid_netcdf) ... 121 return arg File <array_function internals>:180, in where(args, *kwargs) MemoryError: Unable to allocate 19.2 GiB for an array with shape (210, 3500, 7000) and data type float32 ``` Environment

|

{

"url": "https://api.github.com/repos/pydata/xarray/issues/7018/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

reopened | xarray 13221727 | issue | |||||||

| 1340474484 | I_kwDOAMm_X85P5gR0 | 6920 | Writing a netCDF file is slow | lassiterdc 64621312 | closed | 1 | 3 | 2022-08-16T14:48:37Z | 2022-08-16T17:05:37Z | 2022-08-16T17:05:37Z | NONE | What is your issue?This has been discussed in another thread, but the proposed solution there (first Data: dropbox link to 717 netcdf files containing radar rainfall data for 6/28/2014 over the United States that is around 1GB in total. Code: ```python %% Import librariesimport xarray as xr from glob import glob import pandas as pd import time import dask dask.config.set(**{'array.slicing.split_large_chunks': False}) files = glob("data/*.nc") %% functionsdef extract_file_timestep(fname): fname = fname.split('/')[-1] fname = fname.split(".") ftype = fname.pop(-1) fname = ''.join(fname) str_tstep = fname.split("_")[-1] if ftype == "nc": date_format = '%Y%m%d%H%M' if ftype == "grib2": date_format = '%Y%m%d-%H%M%S' def ds_preprocessing(ds): tstamp = extract_file_timestep(ds.encoding['source']) ds.coords["time"] = tstamp ds = ds.expand_dims({"time":1}) ds = ds.rename({"lon":"longitude", "lat":"latitude", "mrms_a2m":"rainrate"}) ds = ds.chunk(chunks={"latitude":3500, "longitude":7000, "time":1}) return ds %% Loading and formatting datalst_ds = [] start_time = time.time() for f in files: ds = xr.open_dataset(f, chunks={"latitude":3500, "longitude":7000}) ds = ds_preprocessing(ds) lst_ds.append(ds) ds_comb_frm_lst = xr.concat(lst_ds, dim="time") print("Time to load dataset using concat on list of datasets: {}".format(time.time() - start_time)) start_time = time.time() ds_comb_frm_open_mfdataset = xr.open_mfdataset(files, chunks={"latitude":3500, "longitude":7000}, concat_dim = "time", preprocess=ds_preprocessing, combine="nested") print("Time to load dataset using open_mfdataset: {}".format(time.time() - start_time)) %% exporting to netcdfstart_time = time.time() ds_comb_frm_lst.to_netcdf("ds_comb_frm_lst.nc", encoding= {"rainrate":{"zlib":True}}) print("Time to export dataset created using concat on list of datasets: {}".format(time.time() - start_time)) start_time = time.time() ds_comb_frm_open_mfdataset.to_netcdf("ds_comb_frm_open_mfdataset.nc", encoding= {"rainrate":{"zlib":True}}) print("Time to export dataset created using open_mfdataset: {}".format(time.time() - start_time)) ``` |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/6920/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | xarray 13221727 | issue | ||||||

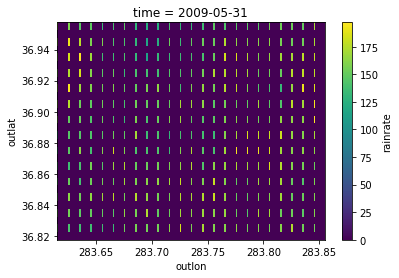

| 1332143835 | I_kwDOAMm_X85PZubb | 6892 | 2 Dimension Plot Producing Discontinuous Grid | lassiterdc 64621312 | closed | 0 | 1 | 2022-08-08T16:59:14Z | 2022-08-08T17:12:41Z | 2022-08-08T17:11:44Z | NONE | What is your issue?Problem: I'm expecting a plot that looks like the one here (Plotting-->Two Dimensions-->Simple Example) with a continuous grid, but instead I'm getting the plot below which has a discontinuous grid. This could be due to different spacing in the x and y dimensions (0.005 spacing in the Reprex:

|

{

"url": "https://api.github.com/repos/pydata/xarray/issues/6892/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | xarray 13221727 | issue | ||||||

| 1308176241 | I_kwDOAMm_X85N-S9x | 6805 | PermissionError: [Errno 13] Permission denied | lassiterdc 64621312 | closed | 0 | 5 | 2022-07-18T16:05:31Z | 2022-07-18T17:58:38Z | 2022-07-18T17:58:38Z | NONE | What is your issue?This was raised about a year ago but still seems to be unresolved, so I'm hoping this will bring attention back to the issue. (https://github.com/pydata/xarray/issues/5488) Data: dropbox sharing link Description: This folder contains 2 files each containing 1 day's worth of 1kmx1km gridded precipitation rate data from the National Severe Storms Laboratory. Each is about a gig (sorry they're so big, but it's what I'm working with!) Code: ```python import xarray as xr f_in_ncs = "data/" f_in_nc = "data/20190520.nc" %% worksds = xr.open_dataset(f_in_nc, chunks={'outlat':3500, 'outlon':7000, 'time':50}) %% doesn't workmf_ds = xr.open_mfdataset(f_in_ncs, concat_dim = "time",

chunks={'outlat':3500, 'outlon':7000, 'time':50},

combine = "nested", engine = 'netcdf4')

KeyError Traceback (most recent call last) File c:\Users\Daniel\anaconda3\envs\mrms\lib\site-packages\xarray\backends\file_manager.py:199, in CachingFileManager._acquire_with_cache_info(self, needs_lock) 198 try: --> 199 file = self._cache[self._key] 200 except KeyError: File c:\Users\Daniel\anaconda3\envs\mrms\lib\site-packages\xarray\backends\lru_cache.py:53, in LRUCache.getitem(self, key) 52 with self._lock: ---> 53 value = self._cache[key] 54 self._cache.move_to_end(key) KeyError: [<class 'netCDF4._netCDF4.Dataset'>, ('d:\mrms_processing\_reprex\2022-7-18_open_mfdataset\data',), 'r', (('clobber', True), ('diskless', False), ('format', 'NETCDF4'), ('persist', False))] During handling of the above exception, another exception occurred: PermissionError Traceback (most recent call last) Input In [4], in <cell line: 5>() 1 import xarray as xr 3 f_in_ncs = "data/" ----> 5 ds = xr.open_mfdataset(f_in_ncs, concat_dim = "time", 6 chunks={'outlat':3500, 'outlon':7000, 'time':50}, 7 combine = "nested", engine = 'netcdf4') File c:\Users\Daniel\anaconda3\envs\mrms\lib\site-packages\xarray\backends\api.py:908, in open_mfdataset(paths, chunks, concat_dim, compat, preprocess, engine, data_vars, coords, combine, parallel, join, attrs_file, combine_attrs, **kwargs) ... File src\netCDF4_netCDF4.pyx:2307, in netCDF4._netCDF4.Dataset.init() File src\netCDF4_netCDF4.pyx:1925, in netCDF4._netCDF4._ensure_nc_success() PermissionError: [Errno 13] Permission denied: b'd:\mrms_processing\_reprex\2022-7-18_open_mfdataset\data' ``` |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/6805/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | xarray 13221727 | issue |

Advanced export

JSON shape: default, array, newline-delimited, object

CREATE TABLE [issues] (

[id] INTEGER PRIMARY KEY,

[node_id] TEXT,

[number] INTEGER,

[title] TEXT,

[user] INTEGER REFERENCES [users]([id]),

[state] TEXT,

[locked] INTEGER,

[assignee] INTEGER REFERENCES [users]([id]),

[milestone] INTEGER REFERENCES [milestones]([id]),

[comments] INTEGER,

[created_at] TEXT,

[updated_at] TEXT,

[closed_at] TEXT,

[author_association] TEXT,

[active_lock_reason] TEXT,

[draft] INTEGER,

[pull_request] TEXT,

[body] TEXT,

[reactions] TEXT,

[performed_via_github_app] TEXT,

[state_reason] TEXT,

[repo] INTEGER REFERENCES [repos]([id]),

[type] TEXT

);

CREATE INDEX [idx_issues_repo]

ON [issues] ([repo]);

CREATE INDEX [idx_issues_milestone]

ON [issues] ([milestone]);

CREATE INDEX [idx_issues_assignee]

ON [issues] ([assignee]);

CREATE INDEX [idx_issues_user]

ON [issues] ([user]);