issues

28 rows where comments = 6 and user = 2448579 sorted by updated_at descending

This data as json, CSV (advanced)

Suggested facets: created_at (date), updated_at (date), closed_at (date)

| id | node_id | number | title | user | state | locked | assignee | milestone | comments | created_at | updated_at ▲ | closed_at | author_association | active_lock_reason | draft | pull_request | body | reactions | performed_via_github_app | state_reason | repo | type |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2021858121 | PR_kwDOAMm_X85g81wJ | 8510 | Grouper object design doc | dcherian 2448579 | closed | 0 | 6 | 2023-12-02T04:56:54Z | 2024-03-06T02:27:07Z | 2024-03-06T02:27:04Z | MEMBER | 0 | pydata/xarray/pulls/8510 | xref #8509, #6610 Rendered version here @pydata/xarray I've been poking at this on and off for a year now and finally figured out how to do it cleanly (#8509). I wrote up a design doc for 8509 implements two custom Groupers for you to try out :)```python import xarray as xr from xarray.core.groupers import SeasonGrouper, SeasonResampler ds = xr.tutorial.open_dataset("air_temperature") custom seasons!ds.air.groupby(time=SeasonGrouper(["JF", "MAM", "JJAS", "OND"])).mean() ds.air.resample(time=SeasonResampler(["DJF", "MAM", "JJAS", "ON"])).count() ``` All comments are welcome,

1. there are a couple of specific API and design decisions to be made. I'll make some comments pointing these out.

2. I'm also curious about what cc @ilan-gold @ivirshup @aulemahal @tomvothecoder @jbusecke @katiedagon - it would be good to hear what "Groupers" would be useful for your work / projects. I bet you already have examples that fit this proposal |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/8510/reactions",

"total_count": 8,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 8,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | pull | |||||

| 1942666419 | PR_kwDOAMm_X85cxojc | 8304 | Move Variable aggregations to NamedArray | dcherian 2448579 | closed | 0 | 6 | 2023-10-13T21:31:01Z | 2023-11-06T04:25:43Z | 2023-10-17T19:14:12Z | MEMBER | 0 | pydata/xarray/pulls/8304 |

|

{

"url": "https://api.github.com/repos/pydata/xarray/issues/8304/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | pull | |||||

| 1908084109 | I_kwDOAMm_X85xuw2N | 8223 | release 2023.09.0 | dcherian 2448579 | closed | 0 | 6 | 2023-09-22T02:29:30Z | 2023-09-26T08:12:46Z | 2023-09-26T08:12:46Z | MEMBER | We've accumulated a nice number of changes. Can someone volunteer to do a release in the next few days? |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/8223/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | xarray 13221727 | issue | ||||||

| 756425955 | MDU6SXNzdWU3NTY0MjU5NTU= | 4648 | Comprehensive benchmarking suite | dcherian 2448579 | open | 0 | 6 | 2020-12-03T18:01:57Z | 2023-06-15T16:56:00Z | MEMBER | I think a good "infrastructure" target for the NASA OSS call would be to expand our benchmarking suite (https://pandas.pydata.org/speed/xarray/#/) AFAIK running these in a useful manner on CI is still unsolved (please correct me if I'm wrong). But we can always run it on an NCAR machine using a cron job. Thoughts? cc @scottyhq A quick survey of work needed (please append): - [ ] indexing & slicing #3382 #2799 #2227 - [ ] DataArray construction #4744 - [ ] attribute access #4741, #4742 - [ ] property access #3514 - [ ] reindexing? https://github.com/pydata/xarray/issues/1385#issuecomment-297539517 - [x] alignment #3755, #7738 - [ ] assignment #1771 - [ ] coarsen - [x] groupby #659 #7795 #7796 - [x] resample #4498 #7795 - [ ] weighted #4482 #3883 - [ ] concat #7824 - [ ] merge - [ ] open_dataset, open_mfdataset #1823 - [ ] stack / unstack - [ ] apply_ufunc? - [x] interp #4740 #7843 - [ ] reprs #4744 - [x] to_(dask)_dataframe #7844 #7474 Related: #3514 |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/4648/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | issue | ||||||||

| 1236174701 | I_kwDOAMm_X85Jrodt | 6610 | Update GroupBy constructor for grouping by multiple variables, dask arrays | dcherian 2448579 | open | 0 | 6 | 2022-05-15T03:17:54Z | 2023-04-26T16:06:17Z | MEMBER | What is your issue?

To enable this in GroupBy we need to update the constructor's signature to

1. Accept multiple "by" variables.

2. Accept "expected group labels" for grouping by dask variables (like The signature in flox is (may be errors!)

You would calculate that last example using flox as

The use of I propose we update groupby's signature to

1. change So then that example becomes

Thoughts? |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/6610/reactions",

"total_count": 7,

"+1": 7,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | issue | ||||||||

| 809366777 | MDExOlB1bGxSZXF1ZXN0NTc0MjQxMTE3 | 4915 | Better rolling reductions | dcherian 2448579 | closed | 0 | 6 | 2021-02-16T14:37:49Z | 2023-04-13T15:46:18Z | 2021-02-19T19:44:04Z | MEMBER | 0 | pydata/xarray/pulls/4915 | Implements most of https://github.com/pydata/xarray/issues/4325#issuecomment-716399575 ``` python %load_ext memory_profiler import numpy as np import xarray as xr temp = xr.DataArray(np.zeros((5000, 500)), dims=("x", "y")) roll = temp.rolling(x=10, y=20) %memit roll.sum()

%memit roll.reduce(np.sum)

%memit roll.reduce(np.nansum) # master branch behaviour

|

{

"url": "https://api.github.com/repos/pydata/xarray/issues/4915/reactions",

"total_count": 2,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | pull | |||||

| 1642317716 | I_kwDOAMm_X85h48eU | 7685 | Add welcome bot? | dcherian 2448579 | closed | 0 | 6 | 2023-03-27T15:24:25Z | 2023-04-06T01:55:55Z | 2023-04-06T01:55:55Z | MEMBER | Is your feature request related to a problem?Given all the outreachy interest (and perhaps just in general) it may be nice to enable a welcome bot like on the Jupyter repos Describe the solution you'd likeNo response Describe alternatives you've consideredNo response Additional contextNo response |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/7685/reactions",

"total_count": 3,

"+1": 3,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | xarray 13221727 | issue | ||||||

| 1327380960 | PR_kwDOAMm_X848lec7 | 6874 | Avoid calling np.asarray on lazy indexing classes | dcherian 2448579 | closed | 0 | 6 | 2022-08-03T15:13:00Z | 2023-03-31T15:15:31Z | 2023-03-31T15:15:29Z | MEMBER | 0 | pydata/xarray/pulls/6874 | This is motivated by https://docs.rapids.ai/api/kvikio/stable/api.html#kvikio.zarr.GDSStore which on read loads the data directly into GPU memory. Currently we rely on

Here I added Quite a few things are broken I think , but I'd like feedback on the approach. I considered Ref: https://github.com/xarray-contrib/cupy-xarray/pull/10 which adds a |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/6874/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | pull | |||||

| 984555353 | MDU6SXNzdWU5ODQ1NTUzNTM= | 5754 | Variable.stack constructs extremely large chunks | dcherian 2448579 | closed | 0 | 6 | 2021-09-01T03:08:02Z | 2023-03-22T14:51:44Z | 2021-12-14T17:31:45Z | MEMBER | Minimal Complete Verifiable Example: Here's a small array with too-small chunk sizes just as an example ```python Put your MCVE code hereimport dask.array import xarray as xr var = xr.Variable(("x", "y", "z"), dask.array.random.random((4, 18483, 1000), chunks=(1, 183, -1)))

```

Now stack two dimensions, this is a 100x increase in chunk size (in my actual code, 85MB chunks become 8.5GB chunks =) )

But calling SolutionAh, found it , we transpose then reshape in Writing those steps with pure dask yields the same 100x increase in chunksize

Anything else we need to know?: Environment: Output of <tt>xr.show_versions()</tt>INSTALLED VERSIONS ------------------ commit: None python: 3.8.6 | packaged by conda-forge | (default, Jan 25 2021, 23:21:18) [GCC 9.3.0] python-bits: 64 OS: Linux OS-release: 3.10.0-1127.18.2.el7.x86_64 machine: x86_64 processor: x86_64 byteorder: little LC_ALL: en_US.UTF-8 LANG: en_US.UTF-8 LOCALE: ('en_US', 'UTF-8') libhdf5: 1.10.6 libnetcdf: 4.7.4 xarray: 0.19.0 pandas: 1.3.1 numpy: 1.21.1 scipy: 1.5.3 netCDF4: 1.5.6 pydap: installed h5netcdf: 0.11.0 h5py: 3.3.0 Nio: None zarr: 2.8.3 cftime: 1.5.0 nc_time_axis: 1.3.1 PseudoNetCDF: None rasterio: None cfgrib: None iris: 3.0.4 bottleneck: 1.3.2 dask: 2021.07.2 distributed: 2021.07.2 matplotlib: 3.4.2 cartopy: 0.19.0.post1 seaborn: 0.11.1 numbagg: None pint: 0.17 setuptools: 49.6.0.post20210108 pip: 21.2.2 conda: 4.10.3 pytest: 6.2.4 IPython: 7.26.0 sphinx: 4.1.2 |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/5754/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | xarray 13221727 | issue | ||||||

| 344614881 | MDU6SXNzdWUzNDQ2MTQ4ODE= | 2313 | Example on using `preprocess` with `mfdataset` | dcherian 2448579 | open | 0 | 6 | 2018-07-25T21:31:34Z | 2023-03-14T12:35:00Z | MEMBER | I wrote this little notebook today while trying to get some satellite data in form that was nice to work with: https://gist.github.com/dcherian/66269bc2b36c2bc427897590d08472d7 I think it would make a useful example for the docs. A few questions: 1. Do you think it'd be a good addition to the examples? 2. Is this the recommended way of adding meaningful co-ordinates, expanding dims etc.? The main bit is this function: ``` def preprocess(ds): ``` Also open to other feedback... |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/2313/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | issue | ||||||||

| 1321228754 | I_kwDOAMm_X85OwFnS | 6845 | Do we need to update AbstractArray for duck arrays? | dcherian 2448579 | open | 0 | 6 | 2022-07-28T16:59:59Z | 2022-07-29T17:20:39Z | MEMBER | What happened?I'm calling Traceback below:

```

--> 25 a = _core.array(a, copy=False)

26 return a.round(decimals, out=out)

27

cupy/_core/core.pyx in cupy._core.core.array()

cupy/_core/core.pyx in cupy._core.core.array()

cupy/_core/core.pyx in cupy._core.core._array_default()

~/miniconda3/envs/gpu/lib/python3.7/site-packages/xarray/core/common.py in __array__(self, dtype)

146

147 def __array__(self: Any, dtype: DTypeLike = None) -> np.ndarray:

--> 148 return np.asarray(self.values, dtype=dtype)

149

150 def __repr__(self) -> str:

~/miniconda3/envs/gpu/lib/python3.7/site-packages/xarray/core/dataarray.py in values(self)

644 type does not support coercion like this (e.g. cupy).

645 """

--> 646 return self.variable.values

647

648 @values.setter

~/miniconda3/envs/gpu/lib/python3.7/site-packages/xarray/core/variable.py in values(self)

517 def values(self):

518 """The variable's data as a numpy.ndarray"""

--> 519 return _as_array_or_item(self._data)

520

521 @values.setter

~/miniconda3/envs/gpu/lib/python3.7/site-packages/xarray/core/variable.py in _as_array_or_item(data)

257 TODO: remove this (replace with np.asarray) once these issues are fixed

258 """

--> 259 data = np.asarray(data)

260 if data.ndim == 0:

261 if data.dtype.kind == "M":

cupy/_core/core.pyx in cupy._core.core.ndarray.__array__()

TypeError: Implicit conversion to a NumPy array is not allowed. Please use `.get()` to construct a NumPy array explicitly.

```

What did you expect to happen?Not an error? I'm not sure what's expected

My question is : Do we need to update Minimal Complete Verifiable ExampleNo response MVCE confirmation

Relevant log outputNo response Anything else we need to know?No response Environment

xarray v2022.6.0

cupy 10.6.0

|

{

"url": "https://api.github.com/repos/pydata/xarray/issues/6845/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | issue | ||||||||

| 1290524064 | I_kwDOAMm_X85M69Wg | 6741 | some private imports broken on main | dcherian 2448579 | closed | 0 | 6 | 2022-06-30T18:59:28Z | 2022-07-06T03:06:31Z | 2022-07-06T03:06:31Z | MEMBER | What happened?Seen over in cf_xarray Using Now we need to use I don't know if this is something that needs to be fixed or only worked coincidentally earlier. But I thought it was worth discussing prior to release. Thanks to @aulemahal for spotting |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/6741/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | xarray 13221727 | issue | ||||||

| 1258338848 | I_kwDOAMm_X85LALog | 6659 | Publish nightly releases to TestPyPI | dcherian 2448579 | closed | 0 | 6 | 2022-06-02T15:21:24Z | 2022-06-07T08:37:02Z | 2022-06-06T22:33:15Z | MEMBER | Is your feature request related to a problem?From @keewis in #6645

Describe the solution you'd likeNo response Describe alternatives you've consideredNo response Additional contextNo response |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/6659/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | xarray 13221727 | issue | ||||||

| 584461380 | MDU6SXNzdWU1ODQ0NjEzODA= | 3868 | What should pad do about IndexVariables? | dcherian 2448579 | open | 0 | 6 | 2020-03-19T14:40:21Z | 2022-02-22T16:02:21Z | MEMBER | Currently We need to think about 1. Int, Float, Datetime64, CFTime indexes: linearly extrapolate? Should we care whether the index is sorted or not? (I think not) 2. MultiIndexes: ?? 3. CategoricalIndexes: ?? 4. Unindexed dimensions EDIT: Added unindexed dimensions |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/3868/reactions",

"total_count": 6,

"+1": 6,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | issue | ||||||||

| 1038376514 | PR_kwDOAMm_X84tyjh3 | 5903 | Explicitly list all reductions in api.rst | dcherian 2448579 | closed | 0 | 6 | 2021-10-28T10:58:02Z | 2021-11-03T16:55:20Z | 2021-11-03T16:55:19Z | MEMBER | 0 | pydata/xarray/pulls/5903 | This PR makes a number of updates to list all reduction methods in their own subsection under "Dataset" and "DataArray".before

after

List reductions for groupby etcI've also added sections for rolling, coarsen, groupby, resample that again list available reductions. These were previously hidden

Class method names are much more readable.Before

after

|

{

"url": "https://api.github.com/repos/pydata/xarray/issues/5903/reactions",

"total_count": 3,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 3,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | pull | |||||

| 985376146 | MDU6SXNzdWU5ODUzNzYxNDY= | 5758 | RTD build failing | dcherian 2448579 | closed | 0 | 6 | 2021-09-01T16:50:58Z | 2021-09-08T09:47:17Z | 2021-09-08T09:47:16Z | MEMBER | The current RTD build is failing in

Sphinx parallel build error:

RuntimeError: Non Expected exception in |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/5758/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | xarray 13221727 | issue | ||||||

| 549069589 | MDExOlB1bGxSZXF1ZXN0MzYyMjMwMjk0 | 3691 | Implement GroupBy.__getitem__ | dcherian 2448579 | closed | 0 | 6 | 2020-01-13T17:15:23Z | 2021-03-15T11:49:35Z | 2021-03-15T10:20:37Z | MEMBER | 0 | pydata/xarray/pulls/3691 |

Just like the pandas method. |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/3691/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | pull | |||||

| 771295760 | MDU6SXNzdWU3NzEyOTU3NjA= | 4713 | mamba on RTD | dcherian 2448579 | closed | 0 | 6 | 2020-12-19T03:43:50Z | 2020-12-21T21:19:47Z | 2020-12-21T21:19:47Z | MEMBER | RTD now supports using mamba instead of conda using a feature flag : https://docs.readthedocs.io/en/latest/guides/feature-flags.html#available-flags Maybe it's worth seeing if this will reduce docs build time on CI? |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/4713/reactions",

"total_count": 2,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | xarray 13221727 | issue | ||||||

| 650236784 | MDExOlB1bGxSZXF1ZXN0NDQzNzY5MDc1 | 4195 | Propagate attrs with unary, binary functions | dcherian 2448579 | closed | 0 | 6 | 2020-07-02T22:31:42Z | 2020-10-14T16:29:57Z | 2020-10-14T16:29:52Z | MEMBER | 0 | pydata/xarray/pulls/4195 | Closes #3490 Closes #4065 Closes #3433 Closes #3595

Still needs work but I thought I'd get opinions on defaults and the approach. |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/4195/reactions",

"total_count": 3,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 1,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | pull | |||||

| 530657789 | MDExOlB1bGxSZXF1ZXN0MzQ3Mjc5Mjg2 | 3584 | Make dask names change when chunking Variables by different amounts. | dcherian 2448579 | closed | 0 | 6 | 2019-12-01T02:18:52Z | 2020-01-10T16:11:04Z | 2020-01-10T16:10:57Z | MEMBER | 0 | pydata/xarray/pulls/3584 | When rechunking by the current chunk size, name should not change.

Add a

|

{

"url": "https://api.github.com/repos/pydata/xarray/issues/3584/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | pull | |||||

| 527296094 | MDU6SXNzdWU1MjcyOTYwOTQ= | 3563 | environment file for binderized examples | dcherian 2448579 | closed | 0 | 6 | 2019-11-22T16:33:11Z | 2019-11-25T15:57:19Z | 2019-11-25T15:57:19Z | MEMBER | Our examples now have binder links! e.g. https://xarray.pydata.org/en/latest/examples/ERA5-GRIB-example.html but this is suboptimal because we aren't defining an environment so the only things that get installed are the packages that xarray requires : numpy & pandas. We need to at least add cfgrid, matplotlib, cftime, netCDF4 to get all the examples to work out of the box. |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/3563/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | xarray 13221727 | issue | ||||||

| 510937756 | MDExOlB1bGxSZXF1ZXN0MzMxMjI2Mjg0 | 3435 | Deprecate allow_lazy | dcherian 2448579 | closed | 0 | 6 | 2019-10-22T21:44:20Z | 2019-11-13T15:48:50Z | 2019-11-13T15:48:46Z | MEMBER | 0 | pydata/xarray/pulls/3435 |

|

{

"url": "https://api.github.com/repos/pydata/xarray/issues/3435/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | pull | |||||

| 519338791 | MDExOlB1bGxSZXF1ZXN0MzM4MDk4Mzg2 | 3491 | fix pandas-dev tests | dcherian 2448579 | closed | 0 | 6 | 2019-11-07T15:30:29Z | 2019-11-08T15:33:11Z | 2019-11-08T15:33:07Z | MEMBER | 0 | pydata/xarray/pulls/3491 |

This PR makes Not sure what we want to do about these attributes in the long term. One option would be to pop the |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/3491/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | pull | |||||

| 374849806 | MDExOlB1bGxSZXF1ZXN0MjI2NDMxNjM0 | 2524 | Deprecate inplace | dcherian 2448579 | closed | 0 | 6 | 2018-10-29T03:59:34Z | 2019-08-15T15:33:10Z | 2018-11-03T21:24:13Z | MEMBER | 0 | pydata/xarray/pulls/2524 |

|

{

"url": "https://api.github.com/repos/pydata/xarray/issues/2524/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | pull | |||||

| 464813332 | MDExOlB1bGxSZXF1ZXN0Mjk1MDExOTEw | 3086 | Add broadcast_like. | dcherian 2448579 | closed | 0 | 6 | 2019-07-06T03:38:00Z | 2019-07-14T21:14:57Z | 2019-07-14T20:24:32Z | MEMBER | 0 | pydata/xarray/pulls/3086 |

|

{

"url": "https://api.github.com/repos/pydata/xarray/issues/3086/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | pull | |||||

| 466444738 | MDU6SXNzdWU0NjY0NDQ3Mzg= | 3091 | auto_combine warnings with open_mfdataset | dcherian 2448579 | closed | 0 | 6 | 2019-07-10T18:03:33Z | 2019-07-12T15:43:32Z | 2019-07-12T15:43:32Z | MEMBER | I'm seeing this warning when using I don't think we should be issuing this warning when using |

{

"url": "https://api.github.com/repos/pydata/xarray/issues/3091/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | xarray 13221727 | issue | ||||||

| 446725224 | MDU6SXNzdWU0NDY3MjUyMjQ= | 2977 | 0.12.2 release | dcherian 2448579 | closed | 0 | 6 | 2019-05-21T16:55:49Z | 2019-06-30T03:46:38Z | 2019-06-30T03:46:38Z | MEMBER | Seems like we're close to a release with some nice features. The following PRs are either done or are really close: Features:

indexes:

Bugfixes:

|

{

"url": "https://api.github.com/repos/pydata/xarray/issues/2977/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

completed | xarray 13221727 | issue | ||||||

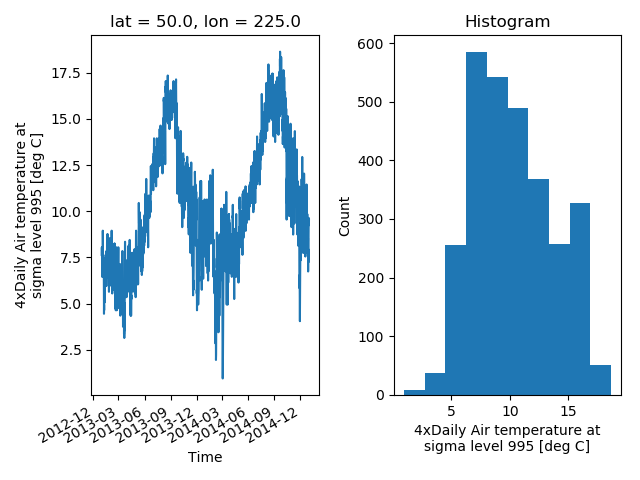

| 324099923 | MDExOlB1bGxSZXF1ZXN0MTg4Nzk1OTE3 | 2151 | Plot labels use CF convention information if available. | dcherian 2448579 | closed | 0 | 6 | 2018-05-17T16:36:04Z | 2018-06-02T00:53:14Z | 2018-06-02T00:10:26Z | MEMBER | 0 | pydata/xarray/pulls/2151 | Uses attrs long_name/standard_name, units if available.

This PR basically uses @rabernat's Examples:

I also changed the way histograms are labelled

Old:

New:

|

{

"url": "https://api.github.com/repos/pydata/xarray/issues/2151/reactions",

"total_count": 2,

"+1": 2,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} |

xarray 13221727 | pull |

Advanced export

JSON shape: default, array, newline-delimited, object

CREATE TABLE [issues] (

[id] INTEGER PRIMARY KEY,

[node_id] TEXT,

[number] INTEGER,

[title] TEXT,

[user] INTEGER REFERENCES [users]([id]),

[state] TEXT,

[locked] INTEGER,

[assignee] INTEGER REFERENCES [users]([id]),

[milestone] INTEGER REFERENCES [milestones]([id]),

[comments] INTEGER,

[created_at] TEXT,

[updated_at] TEXT,

[closed_at] TEXT,

[author_association] TEXT,

[active_lock_reason] TEXT,

[draft] INTEGER,

[pull_request] TEXT,

[body] TEXT,

[reactions] TEXT,

[performed_via_github_app] TEXT,

[state_reason] TEXT,

[repo] INTEGER REFERENCES [repos]([id]),

[type] TEXT

);

CREATE INDEX [idx_issues_repo]

ON [issues] ([repo]);

CREATE INDEX [idx_issues_milestone]

ON [issues] ([milestone]);

CREATE INDEX [idx_issues_assignee]

ON [issues] ([assignee]);

CREATE INDEX [idx_issues_user]

ON [issues] ([user]);