issues: 860418546


| id | node_id | number | title | user | state | locked | assignee | milestone | comments | created_at | updated_at | closed_at | author_association | active_lock_reason | draft | pull_request | body | reactions | performed_via_github_app | state_reason | repo | type |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 860418546 | MDU6SXNzdWU4NjA0MTg1NDY= | 5179 | N-dimensional boolean indexing | 1200058 | open | 0 | | | 6 | 2021-04-17T14:07:48Z | 2021-07-16T17:30:45Z | | NONE | | | | Currently, the docs state that boolean indexing is only possible with 1-dimensional arrays (http://xarray.pydata.org/en/stable/indexing.html). However, I often want to convert a subset of an xarray object, selected by an N-dimensional boolean mask, to a pandas DataFrame.

Usually, I would call, e.g.:

However, this approach is incredibly slow and memory-demanding, because it creates a MultiIndex over every possible coordinate combination in the array, not just over the selected elements.

**Describe the solution you'd like**

A better approach would be to allow indexing directly with the N-dimensional boolean array:

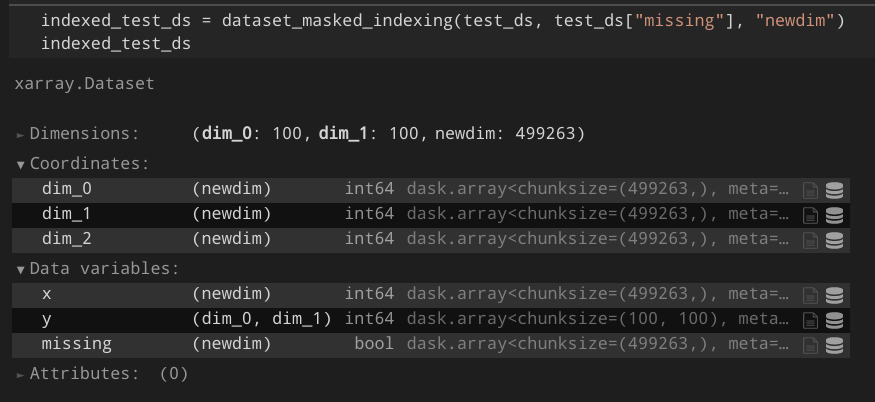
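The proposed behaviour can be approximated with existing xarray operations. Below is a minimal sketch, assuming a recent xarray and NumPy. `boolean_select` and the `points` dimension name are hypothetical: this is neither an xarray API nor the gist's implementation. The helper turns the N-D mask into integer positions with `np.nonzero` and passes them to `isel` as DataArray indexers that share one new dimension, which xarray treats as pointwise (vectorized) indexing. For contrast, the sketch also runs a dense `where(...).to_dataframe().dropna()` conversion. That conversion is assumed here only as one example of a route that builds a MultiIndex over the whole array; it is not necessarily the call the issue refers to.

```python
import numpy as np
import xarray as xr


def boolean_select(obj, mask, new_dim="points"):
    """Hypothetical helper (not an xarray API): keep the elements of `obj`
    where the N-D boolean DataArray `mask` is True, collapsing the mask's
    dimensions into a single new dimension `new_dim`."""
    # Integer positions of the True elements, one array per mask dimension.
    # `.values` loads the mask into memory if it is dask-backed.
    positions = np.nonzero(mask.values)
    # Indexers that share the dimension `new_dim` trigger xarray's vectorized
    # (pointwise) indexing; plain NumPy arrays would select an outer product.
    indexers = {
        dim: xr.DataArray(pos, dims=new_dim)
        for dim, pos in zip(mask.dims, positions)
    }
    return obj.isel(indexers)


rng = np.random.default_rng(0)
da = xr.DataArray(
    rng.random((20, 30, 40)),
    dims=("x", "y", "z"),
    coords={"x": np.arange(20), "y": np.arange(30), "z": np.arange(40)},
    name="value",
)
mask = da > 0.99  # selects roughly 1% of the elements

# Dense route: mask, convert everything, then drop the masked-out rows.
# The intermediate frame has one MultiIndex row per (x, y, z) combination.
dense = da.where(mask).to_dataframe()
dense_subset = dense.dropna()

# Direct route: select first, then convert only the selected elements.
# The former x/y/z coordinates become ordinary columns.
direct_subset = boolean_select(da, mask).to_dataframe()

print(len(dense), len(dense_subset), len(direct_subset))
```

Both routes keep the same number of rows, but only the dense one materializes the full 24,000-row intermediate frame. Because the mask is evaluated eagerly through `.values`, this sketch computes a dask-backed mask up front; the gist instead keeps the positions as a persisted dask array (via `np.argwhere` and `compute_chunk_sizes()`).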

**Additional context**

I created a proof of concept that works for my projects: https://gist.github.com/Hoeze/c746ea1e5fef40d99997f765c48d3c0d

These are the most important lines (`[...]` marks code omitted from the gist):

```python
from typing import List, Tuple

import dask.array
import numpy as np
import xarray as xr


def core_dim_locs_from_cond(cond, new_dim_name, core_dims=None) -> List[Tuple[str, xr.DataArray]]:
    [...]
    # Integer positions of all True elements, shape (n_true, cond.ndim)
    core_dim_locs = np.argwhere(cond.data)
    if isinstance(core_dim_locs, dask.array.core.Array):
        # With dask, the number of True elements per chunk is unknown until computed
        core_dim_locs = core_dim_locs.persist().compute_chunk_sizes()


def subset_variable(variable, core_dim_locs, new_dim_name, mask=None):
    [...]
    subset = dask.array.asanyarray(variable.data)[mask]
    # force-set chunk size from known chunks
    chunk_sizes = core_dim_locs[0][1].chunks[0]
    subset._chunks = (chunk_sizes, *subset._chunks[1:])
```

As a result, I would expect something like this:

|

{
  "url": "https://api.github.com/repos/pydata/xarray/issues/5179/reactions",
  "total_count": 2,
  "+1": 2,
  "-1": 0,
  "laugh": 0,
  "hooray": 0,
  "confused": 0,
  "heart": 0,
  "rocket": 0,
  "eyes": 0
} |

| | 13221727 | issue |