issues: 809853736

| id | node_id | number | title | user | state | locked | assignee | milestone | comments | created_at | updated_at | closed_at | author_association | active_lock_reason | draft | pull_request | body | reactions | performed_via_github_app | state_reason | repo | type |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

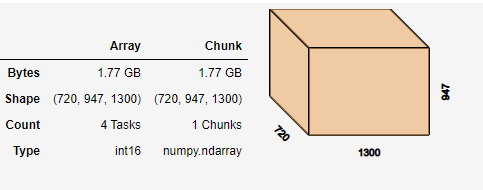

| 809853736 | MDU6SXNzdWU4MDk4NTM3MzY= | 4918 | memoryview is too large saving 1.77GB Array | 68662648 | closed | 0 |  |  | 4 | 2021-02-17T04:56:49Z | 2021-02-18T17:35:45Z | 2021-02-18T17:33:50Z | NONE |  |  |  | **What happened**: Hi all, this is my first time posting. I was working with an extremely large dataset, got it down to a manageable output, and then could not save the files.

**What you expected to happen**: I was expecting a NetCDF file to be written.

**Example**: I don't have the NetCDF files available for upload, so technically this is not reproducible. That said, here is what the code looks like; the error is below it:

```python
import xarray as xr
import netCDF4 as nc
import numpy as np
import dask
import dask.array as da

# File paths were elided in the original report.
JunOne = nc.Dataset('.nc', 'r')
JunTwo = nc.Dataset('.nc', 'r')
JunThree = nc.Dataset('/.nc', 'r')
JunFour = nc.Dataset('/.nc', 'r')
JunFive = nc.Dataset('/.nc', 'r')
JunSix = nc.Dataset('/nc', 'r')
JunSeven = nc.Dataset('/nc', 'r')
JunEight = nc.Dataset('/nc', 'r')
JunNine = nc.Dataset('/.nc', 'r')

# Stack the same spatial subset of 'windspeed' from each of the nine files.
June_ULC = da.from_array(
    [
        JunOne.variables['windspeed'][:, 1001:1948, 0:1300],
        JunTwo.variables['windspeed'][:, 1001:1948, 0:1300],
        JunThree.variables['windspeed'][:, 1001:1948, 0:1300],
        JunFour.variables['windspeed'][:, 1001:1948, 0:1300],
        JunFive.variables['windspeed'][:, 1001:1948, 0:1300],
        JunSix.variables['windspeed'][:, 1001:1948, 0:1300],
        JunSeven.variables['windspeed'][:, 1001:1948, 0:1300],
        JunEight.variables['windspeed'][:, 1001:1948, 0:1300],
        JunNine.variables['windspeed'][:, 1001:1948, 0:1300],
    ],
    chunks=(9, 720, 947, 1300),
)

# Variance across the nine files (axis 0).
June_ULCVar = da.var(June_ULC, axis=0)
June_ULCVarXr = xr.DataArray(
    June_ULCVar[:, :, :],
    dims=["Time", "south_north wdspd", "west_eastwdspd"],
).astype('int16')
June_ULCVarXr.attrs = {'Conventions': 'CF-1.0', 'title': 'WindSpd', 'summary': 'June 2017'}
June_ULCVarXr.to_netcdf('/June_ULCVar.nc', 'a', format="NETCDF4")
```

**Anything else we need to know?**: Even trying to save `June_ULCVarXr[1,1,1]` gets this error.
**Environment**: Python 3

Error output:

```python-traceback
      1 June_ULCVarXr.to_netcdf('June_ULCVar.nc','a',format ="NETCDF4", )

~/.conda/envs/myenv/lib/python3.8/site-packages/xarray/core/dataarray.py in to_netcdf(self, *args, **kwargs)
   2662 dataset = self.to_dataset()
   2663
-> 2664 return dataset.to_netcdf(*args, **kwargs)
   2665
   2666 def to_dict(self, data: bool = True) -> dict:

~/.conda/envs/myenv/lib/python3.8/site-packages/xarray/core/dataset.py in to_netcdf(self, path, mode, format, group, engine, encoding, unlimited_dims, compute, invalid_netcdf)
   1642 from ..backends.api import to_netcdf
   1643
-> 1644 return to_netcdf(
   1645 self,
   1646 path,

~/.conda/envs/myenv/lib/python3.8/site-packages/xarray/backends/api.py in to_netcdf(dataset, path_or_file, mode, format, group, engine, encoding, unlimited_dims, compute, multifile, invalid_netcdf)
   1118 return writer, store
   1119
-> 1120 writes = writer.sync(compute=compute)
   1121
   1122 if path_or_file is None:

~/.conda/envs/myenv/lib/python3.8/site-packages/xarray/backends/common.py in sync(self, compute)
    153 # targets = [dask.delayed(t) for t in self.targets]
    154
--> 155 delayed_store = da.store(
    156 self.sources,
    157 self.targets,

~/.conda/envs/myenv/lib/python3.8/site-packages/dask/array/core.py in store(sources, targets, lock, regions, compute, return_stored, **kwargs)
   1020
   1021 if compute:
-> 1022 result.compute(**kwargs)
   1023 return None
   1024 else:

~/.conda/envs/myenv/lib/python3.8/site-packages/dask/base.py in compute(self, **kwargs)
    277 dask.base.compute
    278 """
--> 279 (result,) = compute(self, traverse=False, **kwargs)
    280 return result
    281

~/.conda/envs/myenv/lib/python3.8/site-packages/dask/base.py in compute(*args, **kwargs)
    559 postcomputes.append(x.__dask_postcompute__())
    560
--> 561 results = schedule(dsk, keys, **kwargs)
    562 return repack([f(r, *a) for r, (f, a) in zip(results, postcomputes)])
    563

~/.conda/envs/myenv/lib/python3.8/site-packages/distributed/client.py in get(self, dsk, keys, restrictions, loose_restrictions, resources, sync, asynchronous, direct, retries, priority, fifo_timeout, actors, **kwargs)
   2662 Client.compute: Compute asynchronous collections
   2663 """
-> 2664 futures = self._graph_to_futures(
   2665 dsk,
   2666 keys=set(flatten([keys])),

~/.conda/envs/myenv/lib/python3.8/site-packages/distributed/client.py in _graph_to_futures(self, dsk, keys, restrictions, loose_restrictions, priority, user_priority, resources, retries, fifo_timeout, actors)
   2592 retries = {k: retries for k in dsk}
   2593
-> 2594 dsk = highlevelgraph_pack(dsk, self, keyset)
   2595
   2596 # Create futures before sending graph (helps avoid contention)

~/.conda/envs/myenv/lib/python3.8/site-packages/distributed/protocol/highlevelgraph.py in highlevelgraph_pack(hlg, client, client_keys)
    122 }
    123 )
--> 124 return dumps_msgpack({"layers": layers})
    125
    126

~/.conda/envs/myenv/lib/python3.8/site-packages/distributed/protocol/core.py in dumps_msgpack(msg, compression)
    182 """
    183 header = {}
--> 184 payload = msgpack.dumps(msg, default=msgpack_encode_default, use_bin_type=True)
    185
    186 fmt, payload = maybe_compress(payload, compression=compression)

~/.conda/envs/myenv/lib/python3.8/site-packages/msgpack/__init__.py in packb(o, **kwargs)
     33 See :class:`Packer` for options.
     34 """
---> 35 return Packer(**kwargs).pack(o)
     36
     37

msgpack/_packer.pyx in msgpack._cmsgpack.Packer.pack()
msgpack/_packer.pyx in msgpack._cmsgpack.Packer.pack()
msgpack/_packer.pyx in msgpack._cmsgpack.Packer.pack()
msgpack/_packer.pyx in msgpack._cmsgpack.Packer._pack()
msgpack/_packer.pyx in msgpack._cmsgpack.Packer._pack()
msgpack/_packer.pyx in msgpack._cmsgpack.Packer._pack()
msgpack/_packer.pyx in msgpack._cmsgpack.Packer._pack()
msgpack/_packer.pyx in msgpack._cmsgpack.Packer._pack()
msgpack/_packer.pyx in msgpack._cmsgpack.Packer._pack()
msgpack/_packer.pyx in msgpack._cmsgpack.Packer._pack()
msgpack/_packer.pyx in msgpack._cmsgpack.Packer._pack()
msgpack/_packer.pyx in msgpack._cmsgpack.Packer._pack()

ValueError: memoryview is too large
```
|

{
  "url": "https://api.github.com/repos/pydata/xarray/issues/4918/reactions",
  "total_count": 0,
  "+1": 0,
  "-1": 0,
  "laugh": 0,
  "hooray": 0,
  "confused": 0,
  "heart": 0,
  "rocket": 0,
  "eyes": 0
} |  | completed | 13221727 | issue |
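The 1.77 GB in the issue title can be reproduced from the slices in the example. The sketch below does that arithmetic and also sizes the stacked input that `da.from_array` receives as an in-memory list. It rests on assumptions not stated in the issue: that `windspeed` is stored as 4-byte `float32`, that there are 720 time steps (taken from the `chunks` argument), and that the relevant ceiling is msgpack's limit of 2**32 - 1 bytes for a single binary object. Treat it as a rough plausibility check on the traceback, not a confirmed diagnosis.

```python
# Back-of-the-envelope sizes for the arrays in the issue's example.
# ASSUMPTION: 'windspeed' is float32 (4 bytes); the issue does not say.
# ASSUMPTION: 720 time steps, taken from chunks=(9, 720, 947, 1300).
# ASSUMPTION: msgpack rejects a single binary object over 2**32 - 1 bytes.

N_FILES = 9                 # JunOne ... JunNine
N_TIME = 720                # hypothetical time length (see above)
N_Y = 1948 - 1001           # slice 1001:1948 -> 947 rows
N_X = 1300 - 0              # slice 0:1300 -> 1300 columns

INPUT_ITEMSIZE = 4          # hypothetical float32 itemsize
OUTPUT_ITEMSIZE = 2         # int16, from .astype('int16')
MSGPACK_BIN_LIMIT = 2**32 - 1


def nbytes(shape, itemsize):
    """Return the size in bytes of a dense array with this shape."""
    total = itemsize
    for dim in shape:
        total *= dim
    return total


# The stacked list passed to da.from_array: (files, time, y, x).
input_bytes = nbytes((N_FILES, N_TIME, N_Y, N_X), INPUT_ITEMSIZE)
# The variance over axis 0, cast to int16: (time, y, x).
output_bytes = nbytes((N_TIME, N_Y, N_X), OUTPUT_ITEMSIZE)

print(f"stacked input: {input_bytes / 1e9:.2f} GB, over limit: {input_bytes > MSGPACK_BIN_LIMIT}")
print(f"int16 output:  {output_bytes / 1e9:.2f} GB, over limit: {output_bytes > MSGPACK_BIN_LIMIT}")
```

Under those assumptions the `int16` result comes to about 1.77 GB, matching the title, and fits under the limit, while the stacked input is about 31.91 GB and does not. Because the nine slices are read into memory and handed to `da.from_array` as one array, even a tiny selection such as `June_ULCVarXr[1,1,1]` plausibly still depends on that whole array when the graph is sent to the distributed scheduler, which would be consistent with the error appearing regardless of how little is saved.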