issues

4 rows where state = "open", type = "issue" and user = 4711805 sorted by updated_at descending



| id | node_id | number | title | user | state | locked | assignee | milestone | comments | created_at | updated_at | closed_at | author_association | active_lock_reason | draft | pull_request | body | reactions | performed_via_github_app | state_reason | repo | type |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 406812274 | MDU6SXNzdWU0MDY4MTIyNzQ= | 2745 | reindex doesn't preserve chunks | davidbrochart 4711805 | open | 0 |  |  | 1 | 2019-02-05T14:37:24Z | 2023-12-04T20:46:36Z |  | CONTRIBUTOR |  |  |  | The following code creates a small (100x100) chunked `DataArray`:

```python
import xarray as xr
import numpy as np

n = 100
x = np.arange(n)
y = np.arange(n)
# chunk sizes are given as one tuple, one entry per dimension
da = xr.DataArray(np.zeros(n*n).reshape(n, n), coords=[x, y], dims=['x', 'y']).chunk((n, n))

n2 = 100000
x2 = np.arange(n2)
y2 = np.arange(n2)
# re-index onto a much larger 100000x100000 grid
da2 = da.reindex({'x': x2, 'y': y2})
da2
```

But the re-indexed

Which immediately leads to a memory error when trying to e.g. store it to a

Trying to re-chunk to 100x100 before storing it doesn't help, but this time it takes a lot more time before crashing with a memory error:
| {"url": "https://api.github.com/repos/pydata/xarray/issues/2745/reactions", "total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0} |  |  | xarray 13221727 | issue |
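For issue #2745, some back-of-the-envelope arithmetic shows why losing the chunking matters. This is an editorial sketch, not code from the issue: it assumes float64 data (8 bytes per element, which is what `np.zeros` produces) and uses two hypothetical helpers, `nbytes` and `regular_chunks`. The second one builds a dask-style chunk tuple of the kind one would expect if the original 100-element chunks were carried over to the re-indexed dimensions.

```python
def nbytes(shape, itemsize=8):
    """Size in bytes of a dense array with the given shape (float64 by default)."""
    total = itemsize
    for size in shape:
        total *= size
    return total


def regular_chunks(length, size):
    """Hypothetical helper: split `length` elements into blocks of `size`,
    with a smaller final block if `size` does not divide `length`."""
    full, rest = divmod(length, size)
    return (size,) * full + ((rest,) if rest else ())


n, n2 = 100, 100000

# One 100x100 chunk of the original array: 80,000 bytes (80 kB).
small_chunk = nbytes((n, n))
# The whole 100000x100000 re-indexed array as a single block: 8e10 bytes (80 GB).
single_block = nbytes((n2, n2))

# Chunk layout that would keep 100-element chunks along both dimensions.
preserved = (regular_chunks(n2, n), regular_chunks(n2, n))

print(f"{small_chunk:,} bytes per 100x100 chunk")
print(f"{single_block:,} bytes for one 100000x100000 block")
print(f"{len(preserved[0]) * len(preserved[1]):,} chunks if chunking were preserved")
```

Even with chunking preserved, the re-indexed array would be split into a million 100x100 chunks, so the choice of output chunk size is itself a trade-off between memory per chunk and the number of chunks to schedule.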

| 414641120 | MDU6SXNzdWU0MTQ2NDExMjA= | 2789 | Appending to zarr with string dtype | davidbrochart 4711805 | open | 0 |  |  | 2 | 2019-02-26T14:31:42Z | 2022-04-09T02:18:05Z |  | CONTRIBUTOR |  |  |  |

```python
import xarray as xr

da = xr.DataArray(['foo'])
ds = da.to_dataset(name='da')
ds.to_zarr('ds')  # no special encoding specified
ds = xr.open_zarr('ds')
print(ds.da.values)
```

The following code prints

The problem is that if I want to append to the zarr archive, like so:

```python
import zarr

ds = zarr.open('ds', mode='a')
da_new = xr.DataArray(['barbar'])
ds.da.append(da_new)
ds = xr.open_zarr('ds')
print(ds.da.values)
```

It prints

If I want to specify the encoding with the maximum length, e.g.:

It solves the length problem, but now my strings are kept as bytes:

It is not taken into account. The zarr encoding is

The solution with
| {"url": "https://api.github.com/repos/pydata/xarray/issues/2789/reactions", "total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0} |  |  | xarray 13221727 | issue |
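Issue #2789 is easier to follow with NumPy's fixed-width string dtypes in mind. The snippet below is a plain-NumPy illustration that rests on one assumption of ours, not something stated in the truncated issue text: that the stored array keeps the fixed-width `<U3` dtype inferred from `'foo'`. Under that assumption, writing a longer string silently truncates it. Declaring a wider width up front keeps all six characters, and so does a fixed-width bytes dtype such as `S6`, except that values then come back as `bytes` rather than `str`.

```python
import numpy as np

# The dtype inferred for ['foo'] is a fixed-width unicode string of length 3.
original = np.array(['foo'])
print(original.dtype)  # <U3

# Simulate appending into storage that keeps that dtype:
# writing 'barbar' into a '<U3' slot silently truncates it.
fixed = np.empty(2, dtype=original.dtype)
fixed[0] = 'foo'
fixed[1] = 'barbar'
print(fixed)  # ['foo' 'bar']

# Declaring the maximum length up front keeps the whole string...
wide = np.empty(2, dtype='<U6')
wide[:] = ['foo', 'barbar']

# ...and so does a fixed-width *bytes* dtype, but the values are bytes.
as_bytes = np.array(['foo', 'barbar'], dtype='S6')
print(as_bytes)  # [b'foo' b'barbar']

# Decoding turns the bytes back into str values.
decoded = np.char.decode(as_bytes, 'utf-8')
```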

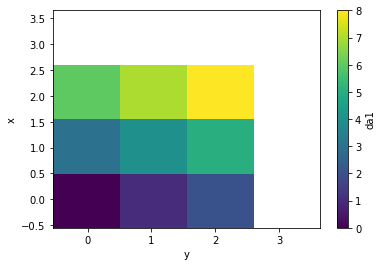

| 777670351 | MDU6SXNzdWU3Nzc2NzAzNTE= | 4756 | feat: reindex multiple DataArrays | davidbrochart 4711805 | open | 0 |  |  | 1 | 2021-01-03T16:23:01Z | 2021-01-03T19:05:03Z |  | CONTRIBUTOR |  |  |  | When e.g. creating a

```python
import xarray as xr

da1 = xr.DataArray([[0, 1, 2], [3, 4, 5], [6, 7, 8]], coords=[[0, 1, 2], [0, 1, 2]], dims=['x', 'y']).rename('da1')
da2 = xr.DataArray([[0, 1, 2], [3, 4, 5], [6, 7, 8]], coords=[[1.1, 2.1, 3.1], [1.1, 2.1, 3.1]], dims=['x', 'y']).rename('da2')
da1.plot.imshow()
da2.plot.imshow()
```

```python
import numpy as np
from functools import reduce

def reindex_all(arrays, dims, tolerance):
    coords = {}
    for dim in dims:
        # sorted union of this dimension's coordinates across all arrays
        coord = reduce(np.union1d, [array[dim] for array in arrays[1:]], np.asarray(arrays[0][dim]))
        # drop every value that lies within `tolerance` of the next one
        diff = coord[:-1] - coord[1:]
        keep = np.abs(diff) > tolerance
        coords[dim] = np.append(coord[:-1][keep], coord[-1])
    # snap each array onto the merged coordinates (NaN where nothing is close enough)
    reindexed = [array.reindex(coords, method='nearest', tolerance=tolerance) for array in arrays]
    return reindexed

da1r, da2r = reindex_all([da1, da2], ['x', 'y'], 0.2)
dsr = xr.Dataset({'da1': da1r, 'da2': da2r})
dsr['da1'].plot.imshow()
dsr['da2'].plot.imshow()
```
| {"url": "https://api.github.com/repos/pydata/xarray/issues/4756/reactions", "total_count": 1, "+1": 1, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0} |  |  | xarray 13221727 | issue |
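The coordinate handling in `reindex_all` from issue #4756 can be checked without xarray. In the sketch below, `merge_coords` restates the union-and-thin step of `reindex_all` in plain NumPy. `nearest_indexer` is a hypothetical, simplified stand-in for the nearest-neighbour lookup that `reindex(method='nearest', tolerance=...)` performs. It returns -1 for "no match", following the convention of pandas' `Index.get_indexer`, but it is not xarray's or pandas' implementation and assumes ascending coordinates.

```python
import numpy as np
from functools import reduce


def merge_coords(coord_arrays, tolerance):
    """Sorted union of several 1-D coordinates, keeping only the last value of
    any run of points spaced `tolerance` or less apart (the rule reindex_all uses)."""
    coord = reduce(np.union1d, coord_arrays[1:], np.asarray(coord_arrays[0]))
    keep = np.abs(np.diff(coord)) > tolerance
    return np.append(coord[:-1][keep], coord[-1])


def nearest_indexer(source, target, tolerance):
    """Hypothetical nearest-with-tolerance lookup: for each target value, the
    index of the closest value in `source` (sorted ascending), or -1 if that
    value is farther than `tolerance` away."""
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    pos = np.searchsorted(source, target)
    left = np.clip(pos - 1, 0, len(source) - 1)
    right = np.clip(pos, 0, len(source) - 1)
    use_left = np.abs(target - source[left]) <= np.abs(source[right] - target)
    nearest = np.where(use_left, left, right)
    return np.where(np.abs(source[nearest] - target) <= tolerance, nearest, -1)


x1 = [0, 1, 2]
x2 = [1.1, 2.1, 3.1]
merged = merge_coords([x1, x2], 0.2)
idx1 = nearest_indexer(x1, merged, 0.2)
idx2 = nearest_indexer(x2, merged, 0.2)
print(merged, idx1, idx2)
```

On the grids from the issue, the merged `x` coordinate is 0, 1.1, 2.1, 3.1: within each close pair, the larger value survives. `da1` has nothing within 0.2 of 3.1 and `da2` has nothing within 0.2 of 0, so those are the positions that end up as NaN after re-indexing.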

| 415614806 | MDU6SXNzdWU0MTU2MTQ4MDY= | 2793 | Fit bounding box to coarser resolution | davidbrochart 4711805 | open | 0 |  |  | 2 | 2019-02-28T13:07:09Z | 2019-04-11T14:37:47Z |  | CONTRIBUTOR |  |  |  | When using coarsen, we often need to align the original DataArray with the coarser coordinates. For instance:

```python
import xarray as xr
import numpy as np

da = xr.DataArray(np.arange(4*4).reshape(4, 4),
                  coords=[np.arange(4, 0, -1) + 0.5, np.arange(4) + 0.5],
                  dims=['lat', 'lon'])
da
```

```
<xarray.DataArray (lat: 4, lon: 4)>
array([[ 0,  1,  2,  3],
       [ 4,  5,  6,  7],
       [ 8,  9, 10, 11],
       [12, 13, 14, 15]])
Coordinates:
  * lat      (lat) float64 4.5 3.5 2.5 1.5
  * lon      (lon) float64 0.5 1.5 2.5 3.5
```

```python
da.coarsen(lat=2, lon=2).mean()
```

```
<xarray.DataArray (lat: 2, lon: 2)>
array([[ 2.5,  4.5],
       [10.5, 12.5]])
Coordinates:
  * lat      (lat) float64 4.0 2.0
  * lon      (lon) float64 1.0 3.0
```

```python
# adjust_bbox is not part of xarray; its definition is not included here
da = adjust_bbox(da, {'lat': (2, -1), 'lon': (2, 1)})
da
```

```
<xarray.DataArray (lat: 6, lon: 4)>
array([[ 0.,  0.,  0.,  0.],
       [ 0.,  1.,  2.,  3.],
       [ 4.,  5.,  6.,  7.],
       [ 8.,  9., 10., 11.],
       [12., 13., 14., 15.],
       [ 0.,  0.,  0.,  0.]])
Coordinates:
  * lat      (lat) float64 5.5 4.5 3.5 2.5 1.5 0.5
  * lon      (lon) float64 0.5 1.5 2.5 3.5
```

```python
da.coarsen(lat=2, lon=2).mean()
```

```
<xarray.DataArray (lat: 3, lon: 2)>
array([[0.25, 1.25],
       [6.5 , 8.5 ],
       [6.25, 7.25]])
Coordinates:
  * lat      (lat) float64 5.0 3.0 1.0
  * lon      (lon) float64 1.0 3.0
```
| {"url": "https://api.github.com/repos/pydata/xarray/issues/2793/reactions", "total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0} |  |  | xarray 13221727 | issue |
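Issue #2793 calls `adjust_bbox` without showing its definition. The following is a hypothetical NumPy reconstruction, not the author's code, written only to reproduce the padded result shown in that issue. It rests on several assumptions of ours:

- each `(2, -1)` / `(2, 1)` pair means (coarsening factor, coordinate step);
- the coarse grid is anchored at 0;
- coordinates are regularly spaced cell centres;
- new cells are filled with 0, as in the output shown.

The names `bbox_pad_widths`, `adjust_bbox_np` and `coarsen_mean_2d` are illustrative only.

```python
import math
import numpy as np


def bbox_pad_widths(coord, factor, step):
    """Cells to add before/after a regularly spaced 1-D coordinate so that its
    cell edges line up with a grid `factor` times coarser, anchored at 0.
    Assumes cell edges fall on integer multiples of `step`."""
    first_edge = float(coord[0]) - step / 2    # outer edge of the first cell
    u0 = int(round(first_edge / step))         # that edge, in units of `step`
    before = u0 - math.floor(u0 / factor) * factor
    after = -(len(coord) + before) % factor
    return before, after


def adjust_bbox_np(values, coords, spec, fill=0):
    """Hypothetical NumPy analogue of adjust_bbox: pad a 2-D array with `fill`
    and extend its coordinates; `spec` holds one (factor, step) pair per axis."""
    pad_widths, new_coords = [], []
    for coord, (factor, step) in zip(coords, spec):
        coord = np.asarray(coord, dtype=float)
        before, after = bbox_pad_widths(coord, factor, step)
        pad_widths.append((before, after))
        new_coords.append(np.concatenate([
            coord[0] - step * np.arange(before, 0, -1),  # cells added before the first one
            coord,
            coord[-1] + step * np.arange(1, after + 1),  # cells added after the last one
        ]))
    padded = np.pad(values, pad_widths, mode="constant", constant_values=fill)
    return padded, new_coords


def coarsen_mean_2d(values, fy, fx):
    """Means over non-overlapping fy x fx blocks (the shape must divide evenly)."""
    ny, nx = values.shape
    return values.reshape(ny // fy, fy, nx // fx, fx).mean(axis=(1, 3))


values = np.arange(4 * 4).reshape(4, 4)
lat = np.arange(4, 0, -1) + 0.5   # 4.5, 3.5, 2.5, 1.5 (descending, step -1)
lon = np.arange(4) + 0.5          # 0.5, 1.5, 2.5, 3.5 (ascending, step 1)

padded, (new_lat, new_lon) = adjust_bbox_np(values, [lat, lon], [(2, -1), (2, 1)])
coarse = coarsen_mean_2d(padded, 2, 2)
coarse_lat = new_lat.reshape(-1, 2).mean(axis=1)
print(coarse, coarse_lat)
```

For `lat`, the first cell edge is 5.0, so one row is added above it (centre 5.5) and one below (centre 0.5). That brings the coarse cell edges to 6, 4, 2 and 0. `lon` already starts on an edge at 0 and is left unchanged.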


CREATE TABLE [issues] (

[id] INTEGER PRIMARY KEY,

[node_id] TEXT,

[number] INTEGER,

[title] TEXT,

[user] INTEGER REFERENCES [users]([id]),

[state] TEXT,

[locked] INTEGER,

[assignee] INTEGER REFERENCES [users]([id]),

[milestone] INTEGER REFERENCES [milestones]([id]),

[comments] INTEGER,

[created_at] TEXT,

[updated_at] TEXT,

[closed_at] TEXT,

[author_association] TEXT,

[active_lock_reason] TEXT,

[draft] INTEGER,

[pull_request] TEXT,

[body] TEXT,

[reactions] TEXT,

[performed_via_github_app] TEXT,

[state_reason] TEXT,

[repo] INTEGER REFERENCES [repos]([id]),

[type] TEXT

);

CREATE INDEX [idx_issues_repo]

ON [issues] ([repo]);

CREATE INDEX [idx_issues_milestone]

ON [issues] ([milestone]);

CREATE INDEX [idx_issues_assignee]

ON [issues] ([assignee]);

CREATE INDEX [idx_issues_user]

ON [issues] ([user]);
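The header of this page describes the filter and sort behind the table. Against the schema above, it corresponds roughly to the SQL in the sketch below. The sketch is our own illustration, not the query the page actually ran: it loads the metadata of the four rows listed here into an in-memory SQLite database with Python's built-in `sqlite3` module and runs that query. Only the columns needed for the filter and sort are filled in; the rest stay NULL.

```python
import sqlite3

# Schema copied from the CREATE TABLE statement above (indexes omitted).
SCHEMA = """
CREATE TABLE [issues] (
   [id] INTEGER PRIMARY KEY,
   [node_id] TEXT,
   [number] INTEGER,
   [title] TEXT,
   [user] INTEGER REFERENCES [users]([id]),
   [state] TEXT,
   [locked] INTEGER,
   [assignee] INTEGER REFERENCES [users]([id]),
   [milestone] INTEGER REFERENCES [milestones]([id]),
   [comments] INTEGER,
   [created_at] TEXT,
   [updated_at] TEXT,
   [closed_at] TEXT,
   [author_association] TEXT,
   [active_lock_reason] TEXT,
   [draft] INTEGER,
   [pull_request] TEXT,
   [body] TEXT,
   [reactions] TEXT,
   [performed_via_github_app] TEXT,
   [state_reason] TEXT,
   [repo] INTEGER REFERENCES [repos]([id]),
   [type] TEXT
);
"""

# Metadata of the four rows shown on this page, inserted in issue-number order.
ROWS = [
    (406812274, 2745, "reindex doesn't preserve chunks", 4711805, "open", "issue", "2023-12-04T20:46:36Z"),
    (414641120, 2789, "Appending to zarr with string dtype", 4711805, "open", "issue", "2022-04-09T02:18:05Z"),
    (415614806, 2793, "Fit bounding box to coarser resolution", 4711805, "open", "issue", "2019-04-11T14:37:47Z"),
    (777670351, 4756, "feat: reindex multiple DataArrays", 4711805, "open", "issue", "2021-01-03T19:05:03Z"),
]

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany(
    "INSERT INTO issues (id, number, title, [user], state, type, updated_at) "
    "VALUES (?, ?, ?, ?, ?, ?, ?)",
    ROWS,
)

# ISO 8601 UTC timestamps in one fixed format sort correctly as plain text.
QUERY = """
SELECT number, title FROM issues
WHERE state = ? AND type = ? AND [user] = ?
ORDER BY updated_at DESC
"""
result = conn.execute(QUERY, ("open", "issue", 4711805)).fetchall()
for number, title in result:
    print(number, title)
```

SQLite does not enforce the `REFERENCES` clauses unless foreign keys are switched on, so the referenced `users`, `milestones` and `repos` tables do not need to exist for this sketch.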

I have not found something equivalent. If you think this is worth it, I could try and send a PR to implement such a feature.
