issue_comments

9 rows where issue = 1381955373 sorted by updated_at descending



issue: Merge wrongfully creating NaN · 9

| id | html_url | issue_url | node_id | user | created_at | updated_at | author_association | body | reactions | performed_via_github_app | issue |
|---|---|---|---|---|---|---|---|---|---|---|---|

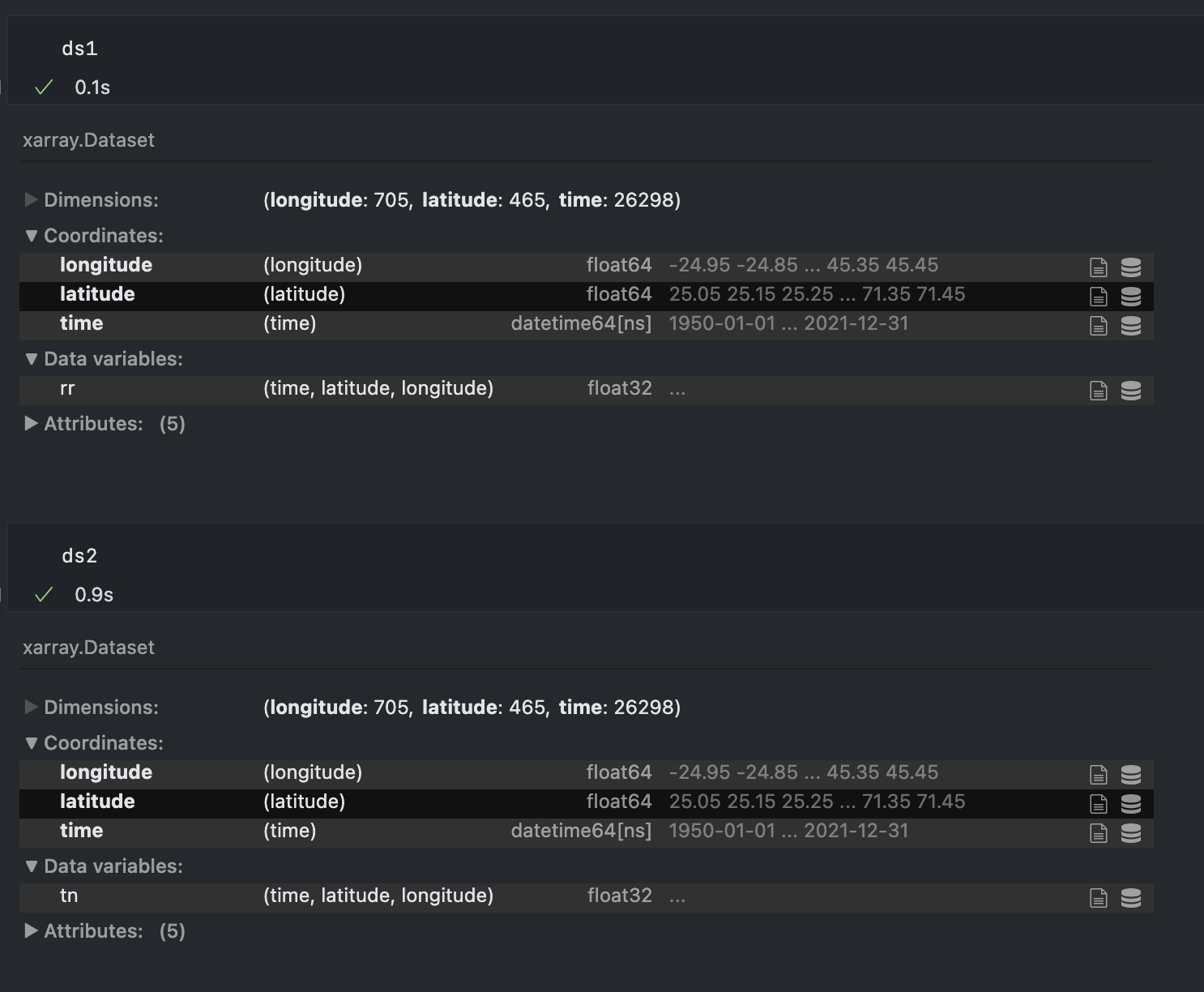

| 1260899163 | https://github.com/pydata/xarray/issues/7065#issuecomment-1260899163 | https://api.github.com/repos/pydata/xarray/issues/7065 | IC_kwDOAMm_X85LJ8tb | guidocioni 12760310 | 2022-09-28T13:16:13Z | 2022-09-28T13:16:13Z | NONE | Hey @benbovy, sorry to resurrect this post again, but today I'm seeing the same issue and for the life of me I cannot understand what difference in this dataset is causing the latitude and longitude arrays to be duplicated...

If I try to merge these two datasets, I get one with lat/lon doubled in size. |

{"total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0} |

Merge wrongfully creating NaN 1381955373 | |
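A minimal sketch of how this doubling can happen, assuming (as diagnosed elsewhere in this thread) that one dataset stores its coordinate labels as float32 and the other as float64. The variable names `a` and `b` and the three latitude values are made up for illustration. With an outer join, every label that is not exactly representable in float32 appears twice in the merged index, and each variable is padded with NaN at the labels it does not have:

```python
import numpy as np
import xarray as xr

lat = np.array([45.8, 45.9, 46.0])  # 46.0 is exactly representable in float32

# Same nominal labels, stored with different precision
ds32 = xr.Dataset({"a": ("lat", [1.0, 2.0, 3.0])}, coords={"lat": lat.astype(np.float32)})
ds64 = xr.Dataset({"b": ("lat", [10.0, 20.0, 30.0])}, coords={"lat": lat})

# Outer join: the union of both indexes becomes the merged index
merged = xr.merge([ds32, ds64], join="outer", compat="no_conflicts")

print(merged.sizes["lat"])              # 5 labels instead of 3
print(merged["lat"].values)
print(int(merged["a"].isnull().sum()))  # NaN where only ds64 has a label
```

Only 46.0 survives as a single label; 45.8 and 45.9 each show up once in their float32-widened form and once as the original float64 value.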

| 1255092548 | https://github.com/pydata/xarray/issues/7065#issuecomment-1255092548 | https://api.github.com/repos/pydata/xarray/issues/7065 | IC_kwDOAMm_X85KzzFE | guidocioni 12760310 | 2022-09-22T14:17:07Z | 2022-09-22T14:17:17Z | NONE |

The differences are larger than I would expect (on the order of 0.1 in some variables), but they could be because, when using different precisions, the closest grid points to the target point could change. That would lead to a different value of the variable extracted from the original dataset. Unfortunately I didn't have time to verify whether this is the case, but I think it is the only valid explanation because the variables of the dataset are untouched. It is still puzzling because, as the target points have a precision of e.g. (45.820497820, 13.003510004), I would expect casting the dataset coordinates from e.g. (45.8, 13.0) to simply pad them with zeros (45.800000000, 13.000000000), so that the closest point should not change. Anyway, I think we're getting off-topic, thanks for the help :) |

{"total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0} |

Merge wrongfully creating NaN 1381955373 | |
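The expectation above (that casting (45.8, 13.0) to a higher precision just appends zeros) holds only for values that are exactly representable in binary floating point. 13.0 is, but 45.8 is not, so a float32 label widened back to float64 lands slightly below 45.8. A quick check with NumPy, where `float32_roundtrip` is a hypothetical helper written for this illustration:

```python
import numpy as np


def float32_roundtrip(value: float) -> float:
    """Store `value` as float32, then widen it back to a Python (64-bit) float."""
    return float(np.float32(value))


# 45.8 is not exactly representable in float32; 13.0 is
for value in (45.8, 13.0):
    widened = float32_roundtrip(value)
    print(f"{value} -> {widened!r} (unchanged: {widened == value})")
```

On a 0.1° grid this offset is below 1e-6 degrees, which is tiny, but it is enough to make float32 and float64 labels compare unequal, and it can tip a nearest-neighbour choice when a target sits almost exactly between two grid points.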

| 1255073449 | https://github.com/pydata/xarray/issues/7065#issuecomment-1255073449 | https://api.github.com/repos/pydata/xarray/issues/7065 | IC_kwDOAMm_X85Kzuap | benbovy 4160723 | 2022-09-22T14:04:22Z | 2022-09-22T14:05:56Z | MEMBER | Actually there's another conversion when you reuse an xarray dimension coordinate in array-like computations:

```python
import numpy as np
import xarray as xr

ds = xr.Dataset(coords={"x": np.array([1.2, 1.3, 1.4], dtype=np.float16)})

# coordinate data is a wrapper around a pandas.Index object
# (it keeps track of the original array dtype)
ds.variables["x"]._data
# PandasIndexingAdapter(array=Float64Index([1.2001953125, 1.2998046875, 1.400390625], dtype='float64', name='x'), dtype=dtype('float16'))

# This coerces the pandas.Index back to a numpy array
np.asarray(ds.x)
# array([1.2, 1.3, 1.4], dtype=float16)

# which is equivalent to
ds.variables["x"]._data.__array__()
# array([1.2, 1.3, 1.4], dtype=float16)
```

The round-trip conversion preserves the original dtype, so different execution times may be expected. I can't say much about why the results differ (how large are the differences?), but I wouldn't be surprised if it's caused by rounding errors accumulated through the computation of a complex formula like haversine. |

{"total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0} |

Merge wrongfully creating NaN 1381955373 | |
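To get a feel for the rounding errors mentioned in this comment, here is a minimal haversine sketch evaluated once in float64 and once in float32, using the station and grid-point coordinates quoted in this thread. The helper `haversine_km` and the 6371 km mean Earth radius are assumptions for illustration, not code from the thread. NumPy ufuncs follow the dtype of array inputs, so the float32 run stays in float32 throughout:

```python
import numpy as np

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km; NumPy keeps the dtype of array inputs."""
    lat1, lon1, lat2, lon2 = map(np.radians, (lat1, lon1, lat2, lon2))
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    a = np.sin(dlat / 2) ** 2 + np.cos(lat1) * np.cos(lat2) * np.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * np.arcsin(np.sqrt(a))


# 1-element arrays (not scalars) so float32 inputs stay float32 under NumPy 1.x and 2.x
station = (np.array([45.820497820]), np.array([13.003510004]))
grid_point = (np.array([45.8]), np.array([13.0]))

d64 = haversine_km(*station, *grid_point)
d32 = haversine_km(*(c.astype(np.float32) for c in station + grid_point))

print(f"float64: {d64[0]:.9f} km")
print(f"float32: {d32[0]:.9f} km")
print(f"difference: {abs(float(d64[0]) - float(d32[0])):.2e} km")
```

For a single pair of points the discrepancy is far below the grid spacing; it mainly matters when two candidate grid points are almost equidistant from the station.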

| 1255026304 | https://github.com/pydata/xarray/issues/7065#issuecomment-1255026304 | https://api.github.com/repos/pydata/xarray/issues/7065 | IC_kwDOAMm_X85Kzi6A | guidocioni 12760310 | 2022-09-22T13:28:17Z | 2022-09-22T13:28:31Z | NONE | Mmmm that's weird, because the execution time is really different, and it would be hard to explain if all the arrays are cast to the same Yeah, for the nearest lookup I already implemented "my version" of |

{"total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0} |

Merge wrongfully creating NaN 1381955373 | |

| 1255014363 | https://github.com/pydata/xarray/issues/7065#issuecomment-1255014363 | https://api.github.com/repos/pydata/xarray/issues/7065 | IC_kwDOAMm_X85Kzf_b | benbovy 4160723 | 2022-09-22T13:19:23Z | 2022-09-22T13:19:23Z | MEMBER |

I don't think so (at least not currently). The numpy arrays are by default converted to Regarding your nearest lat/lon point data selection problem, this is something that could probably be better solved using more specific (custom) indexes like the ones available in xoak. Xoak only supports point-wise selection at the moment, though. |

{"total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0} |

Merge wrongfully creating NaN 1381955373 | |
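For a regular lat/lon grid with 1-D dimension coordinates, xarray's built-in `sel(..., method="nearest")` already supports point-wise nearest lookup when the indexers are `DataArray`s sharing a new dimension. A minimal sketch on a synthetic 0.1° grid (the variable `t2m` and the station coordinates are made up). Unlike the custom indexes mentioned in this comment, it does not handle 2-D (curvilinear) lat/lon coordinates, and it picks the nearest label on each axis separately in degrees rather than by great-circle distance:

```python
import numpy as np
import xarray as xr

# Synthetic regular 0.1-degree grid
lat = np.round(np.linspace(45.0, 46.9, 20), 1)
lon = np.round(np.linspace(12.0, 13.9, 20), 1)
data = np.arange(lat.size * lon.size, dtype=float).reshape(lat.size, lon.size)
ds = xr.Dataset({"t2m": (("lat", "lon"), data)}, coords={"lat": lat, "lon": lon})

# Hypothetical stations; both indexers share the new "station" dimension,
# so selection is point-wise (one grid cell per station), not an outer product
station_lat = xr.DataArray([45.820497820, 46.31], dims="station")
station_lon = xr.DataArray([13.003510004, 12.56], dims="station")

nearest = ds.sel(lat=station_lat, lon=station_lon, method="nearest")
print(nearest["t2m"].dims)    # ('station',)
print(nearest["lat"].values)  # grid latitude picked for each station
print(nearest["lon"].values)  # grid longitude picked for each station
```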

| 1254985357 | https://github.com/pydata/xarray/issues/7065#issuecomment-1254985357 | https://api.github.com/repos/pydata/xarray/issues/7065 | IC_kwDOAMm_X85KzY6N | guidocioni 12760310 | 2022-09-22T12:56:35Z | 2022-09-22T12:56:35Z | NONE | Sorry, that brings me to another question that I never even considered. As my latitude and longitude arrays in both datasets have a resolution of 0.1 degrees, wouldn't it make sense to use From this dataset I'm extracting the closest points to a station inside a user-defined radius, doing something similar to

The thing is, if I use |

{"total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0} |

Merge wrongfully creating NaN 1381955373 | |
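The radius-based extraction described in this comment could be sketched roughly as follows. The helper `haversine_km`, the 6371 km radius, the variable `t2m`, the grid and the 15 km search radius are all assumptions for illustration. NumPy ufuncs applied to xarray objects broadcast the 1-D `lat` and `lon` coordinates against each other by dimension name, producing a 2-D distance field that can be used as a mask:

```python
import numpy as np
import xarray as xr

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km (works on NumPy arrays and xarray objects)."""
    lat1, lon1, lat2, lon2 = map(np.radians, (lat1, lon1, lat2, lon2))
    a = np.sin((lat2 - lat1) / 2) ** 2 + np.cos(lat1) * np.cos(lat2) * np.sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_KM * np.arcsin(np.sqrt(a))


lat = np.round(np.linspace(45.0, 46.9, 20), 1)
lon = np.round(np.linspace(12.0, 13.9, 20), 1)
data = np.arange(lat.size * lon.size, dtype=float).reshape(lat.size, lon.size)
ds = xr.Dataset({"t2m": (("lat", "lon"), data)}, coords={"lat": lat, "lon": lon})

station_lat, station_lon, radius_km = 45.820497820, 13.003510004, 15.0

# ds.lat (lat,) and ds.lon (lon,) broadcast to a (lat, lon) distance field
dist = haversine_km(ds["lat"], ds["lon"], station_lat, station_lon)

# Mask points outside the radius; drop=True trims rows/columns that are entirely NaN
nearby = ds["t2m"].where(dist <= radius_km, drop=True)
print(dict(nearby.sizes), int(nearby.count()))
```

Because the mask is computed in kilometres rather than degrees, the selected area stays roughly circular even though a 0.1° step in longitude is shorter than a 0.1° step in latitude at these latitudes.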

| 1254983291 | https://github.com/pydata/xarray/issues/7065#issuecomment-1254983291 | https://api.github.com/repos/pydata/xarray/issues/7065 | IC_kwDOAMm_X85KzYZ7 | benbovy 4160723 | 2022-09-22T12:54:43Z | 2022-09-22T12:54:43Z | MEMBER |

Not 100% sure but maybe

We already do this for label indexers that are passed to |

{"total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0} |

Merge wrongfully creating NaN 1381955373 | |

| 1254941693 | https://github.com/pydata/xarray/issues/7065#issuecomment-1254941693 | https://api.github.com/repos/pydata/xarray/issues/7065 | IC_kwDOAMm_X85KzOP9 | guidocioni 12760310 | 2022-09-22T12:17:10Z | 2022-09-22T12:17:10Z | NONE | @benbovy you have no idea how much time I spent trying to understand what the difference between the two datasets was... and I completely missed the The problem is that I tried to merge with Before closing, just out of curiosity: in this corner case shouldn't |

{"total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0} |

Merge wrongfully creating NaN 1381955373 | |

| 1254862548 | https://github.com/pydata/xarray/issues/7065#issuecomment-1254862548 | https://api.github.com/repos/pydata/xarray/issues/7065 | IC_kwDOAMm_X85Ky67U | benbovy 4160723 | 2022-09-22T10:58:10Z | 2022-09-22T10:58:36Z | MEMBER | Hi @guidocioni. I see that the longitude and latitude coordinates both have different Here's a small reproducible example:

```python
import numpy as np
import xarray as xr

lat = np.random.uniform(0, 40, size=100)
lon = np.random.uniform(0, 180, size=100)

ds1 = xr.Dataset(
    coords={"lon": lon.astype(np.float32), "lat": lat.astype(np.float32)}
)
ds2 = xr.Dataset(
    coords={"lon": lon, "lat": lat}
)

ds1.indexes["lat"].equals(ds2.indexes["lat"])
# False

xr.merge([ds1, ds2], join="exact")
# ValueError: cannot align objects with join='exact' where index/labels/sizes
# are not equal along these coordinates (dimensions): 'lon' ('lon',)
```

If coordinate labels differ only by their encoding, you could use |

{"total_count": 0, "+1": 0, "-1": 0, "laugh": 0, "hooray": 0, "confused": 0, "heart": 0, "rocket": 0, "eyes": 0} |

Merge wrongfully creating NaN 1381955373 |
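One possible workaround, assuming float32 precision is acceptable for the labels, is to downcast the float64 coordinates before merging so that both datasets carry identical labels; `join="exact"` then succeeds and no NaN padding appears. A minimal sketch with synthetic data on a regular grid (the variables `a` and `b` are made up):

```python
import numpy as np
import xarray as xr

lat = np.round(np.linspace(45.0, 46.9, 20), 1)
lon = np.round(np.linspace(12.0, 13.9, 20), 1)
shape = (lat.size, lon.size)

# Same grid, labels stored as float32 in ds1 and float64 in ds2
ds1 = xr.Dataset(
    {"a": (("lat", "lon"), np.ones(shape))},
    coords={"lat": lat.astype(np.float32), "lon": lon.astype(np.float32)},
)
ds2 = xr.Dataset(
    {"b": (("lat", "lon"), np.zeros(shape))},
    coords={"lat": lat, "lon": lon},
)

# Downcast ds2's labels to float32 so both indexes hold identical values
ds2_f32 = ds2.assign_coords(
    lat=ds2["lat"].values.astype(np.float32),
    lon=ds2["lon"].values.astype(np.float32),
)

merged = xr.merge([ds1, ds2_f32], join="exact", compat="no_conflicts")
print(dict(merged.sizes))
```

Upcasting ds1 to float64 instead would not help: a float32 label such as 45.8, once widened, still sits slightly below the float64 value 45.8, so the indexes would remain unequal.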


CREATE TABLE [issue_comments] (
   [html_url] TEXT,
   [issue_url] TEXT,
   [id] INTEGER PRIMARY KEY,
   [node_id] TEXT,
   [user] INTEGER REFERENCES [users]([id]),
   [created_at] TEXT,
   [updated_at] TEXT,
   [author_association] TEXT,
   [body] TEXT,
   [reactions] TEXT,
   [performed_via_github_app] TEXT,
   [issue] INTEGER REFERENCES [issues]([id])
);
CREATE INDEX [idx_issue_comments_issue]
   ON [issue_comments] ([issue]);
CREATE INDEX [idx_issue_comments_user]
   ON [issue_comments] ([user]);
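The page header describes the query behind this table ("where issue = 1381955373 sorted by updated_at descending"). A minimal sketch of that query against the schema, using Python's built-in `sqlite3` with an in-memory database and a few (id, user, updated_at) values copied from the rows of this page; the remaining columns are left NULL for brevity, and the referenced `users` and `issues` tables are omitted because SQLite does not enforce foreign keys by default:

```python
import sqlite3

SCHEMA = """
CREATE TABLE [issue_comments] (
   [html_url] TEXT,
   [issue_url] TEXT,
   [id] INTEGER PRIMARY KEY,
   [node_id] TEXT,
   [user] INTEGER REFERENCES [users]([id]),
   [created_at] TEXT,
   [updated_at] TEXT,
   [author_association] TEXT,
   [body] TEXT,
   [reactions] TEXT,
   [performed_via_github_app] TEXT,
   [issue] INTEGER REFERENCES [issues]([id])
);
CREATE INDEX [idx_issue_comments_issue] ON [issue_comments] ([issue]);
CREATE INDEX [idx_issue_comments_user] ON [issue_comments] ([user]);
"""

# (id, user, updated_at, issue), inserted deliberately out of order
rows = [
    (1254862548, 4160723, "2022-09-22T10:58:36Z", 1381955373),
    (1260899163, 12760310, "2022-09-28T13:16:13Z", 1381955373),
    (1255073449, 4160723, "2022-09-22T14:05:56Z", 1381955373),
    (1254941693, 12760310, "2022-09-22T12:17:10Z", 1381955373),
]

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany(
    "INSERT INTO issue_comments (id, user, updated_at, issue) VALUES (?, ?, ?, ?)", rows
)

# ISO-8601 timestamps stored as TEXT sort chronologically as plain strings
query = "SELECT id FROM issue_comments WHERE issue = ? ORDER BY updated_at DESC"
ordered = [row[0] for row in conn.execute(query, (1381955373,))]
print(ordered)
conn.close()
```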
